- Sat 26 April 2025

- Experiments

- akuz

For years I’ve wanted to build a super-dense electronic-music compressor: keep only the loops and phase cues that really matter, then re-synthesise the track perfectly. Evenings and weekends, however, were never long enough to design the model, write the maths, and wrangle PyTorch. Recently I opened ChatGPT running the new o3 model and treated it as a design partner. If we could keep the conversation focused, perhaps we could sketch—and prototype—the entire idea in a single stretch.

Co-designing the generative model

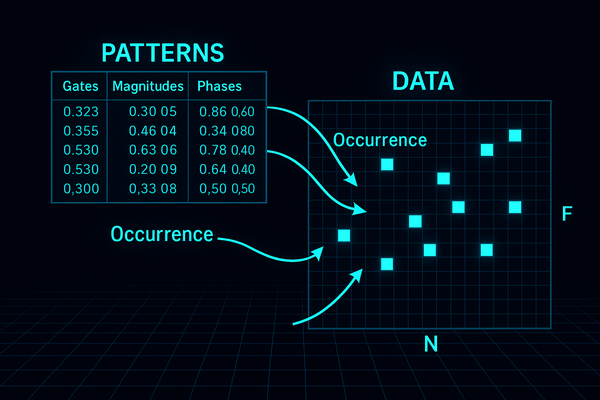

We started by deciding how the data should look. I wanted a phase-aware spectrogram (complex numbers on an F × N grid) rebuilt from a handful of reusable patterns and a sparse list of occurrences. I proposed details; o3 replied with equations. We swapped 3 × 3 windows for 5 × 5, removed global gains and then re-introduced per-occurrence magnitudes, and replaced hard clamping with bilinear interpolation so that gradients would flow. After several iterations we froze a checkpoint: unit-normalised patterns, fractional offsets encoded as phases, and occurrences positioned by two complex numbers rather than fixed indices. o3 typeset the whole formulation in LaTeX, and I compiled it into a concise PDF.
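To make the placement idea concrete, here is a minimal pure-Python sketch of a bilinear "lattice writer". It is not the repository's code. It rests on several assumptions:

- a pattern is a small 2-D list of complex numbers;
- the grid is an F × N list of lists;
- each occurrence carries a complex gain;
- a phase θ in (−π, π] maps to the coordinate (θ/2π + ½)·size, with the pattern centred on that point.

The names `phase_to_coord`, `normalise` and `write_occurrence` are hypothetical. A trainable version would express the same arithmetic with autograd-capable tensors.

```python
import cmath
import math

def phase_to_coord(z: complex, size: int) -> float:
    # Hypothetical mapping: phase in (-pi, pi] -> continuous coordinate in (0, size],
    # so that phase 0 lands in the middle of the axis.
    return (cmath.phase(z) / (2 * math.pi) + 0.5) * size

def normalise(pattern):
    # Scale a pattern to unit L2 norm, so overall level lives only in the per-occurrence gain.
    norm = math.sqrt(sum(abs(p) ** 2 for row in pattern for p in row)) or 1.0
    return [[p / norm for p in row] for row in pattern]

def write_occurrence(grid, pattern, zf: complex, zn: complex, gain: complex):
    """Add gain * pattern to grid (in place), centred at the fractional position
    encoded by the phases of zf (frequency axis) and zn (time axis). Each pattern
    cell is split over its four neighbouring lattice cells with bilinear weights."""
    F, N = len(grid), len(grid[0])
    f0 = phase_to_coord(zf, F) - (len(pattern) - 1) / 2
    n0 = phase_to_coord(zn, N) - (len(pattern[0]) - 1) / 2
    for u, row in enumerate(pattern):
        for v, p in enumerate(row):
            f, n = f0 + u, n0 + v
            fi, ni = math.floor(f), math.floor(n)
            df, dn = f - fi, n - ni
            for a, wf in ((0, 1.0 - df), (1, df)):
                for b, wn in ((0, 1.0 - dn), (1, dn)):
                    i, j = fi + a, ni + b
                    if 0 <= i < F and 0 <= j < N:  # contributions off the grid are dropped
                        grid[i][j] += gain * p * wf * wn
    return grid

# Usage: a single-cell pattern at frequency 3.5, time 4.0 on an 8 x 8 grid
# is shared equally between rows 3 and 4 of column 4.
grid = [[0j] * 8 for _ in range(8)]
write_occurrence(grid, [[1 + 0j]], cmath.exp(-1j * math.pi / 8), 1 + 0j, 1 + 0j)
```

Because the four bilinear weights always sum to one, an occurrence that lies fully inside the grid deposits exactly `gain * sum(pattern)`. That total changes smoothly as the position moves between cells.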

Implementing—and debugging—the first learning loop

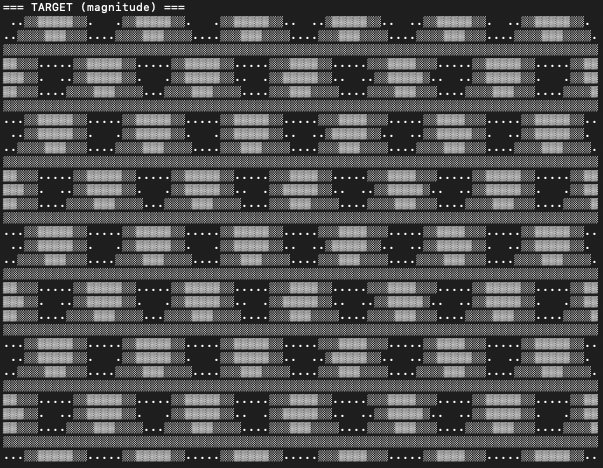

o3 then produced a clean repo: separate modules for patterns, occurrences, a differentiable lattice writer, and a training script. The first run showed a falling loss, yet every pattern stayed at zero. In chat we traced the issue to hard gates that silenced the magnitudes before gradients could reach them. Replacing the mask with soft weights solved the problem immediately, and the patterns began to develop non-zero amplitudes and phases. For visibility we added a simple ASCII heat-map that printed the target spectrogram, the reconstruction, and their difference directly in the terminal.
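The gating problem can be reproduced in a few lines. This is a toy sketch, not the repository's code, and it makes these assumptions:

- the output is a magnitude multiplied by a gate on that same magnitude;
- the hard gate uses a hypothetical threshold of 0.05;
- the soft gate is a logistic curve with a hypothetical temperature of 0.1;
- a central finite difference stands in for autograd.

Starting from a zero magnitude, the hard gate passes back no gradient at all, so the parameter never moves. The soft gate still passes a useful one.

```python
import math

def hard_gate(m: float, threshold: float) -> float:
    # Step function: 1 above threshold, 0 otherwise; derivative is 0 almost everywhere.
    return 1.0 if m > threshold else 0.0

def soft_gate(m: float, threshold: float, temperature: float = 0.1) -> float:
    # Logistic relaxation of the step: smooth, so its derivative is never exactly zero.
    return 1.0 / (1.0 + math.exp(-(m - threshold) / temperature))

def numeric_grad(fn, x: float, eps: float = 1e-6) -> float:
    # Central finite difference, standing in for what backpropagation would compute.
    return (fn(x + eps) - fn(x - eps)) / (2 * eps)

# A magnitude initialised at zero, below the hypothetical threshold.
m0, thr = 0.0, 0.05
hard = numeric_grad(lambda m: m * hard_gate(m, thr), m0)
soft = numeric_grad(lambda m: m * soft_gate(m, thr), m0)
print(hard, soft)  # hard -> 0.0, soft -> about 0.38
```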

ASCII illustrations for debugging

I initialised the data (a grid of complex numbers) to a wavy pattern (ASCII representation of the magnitude):

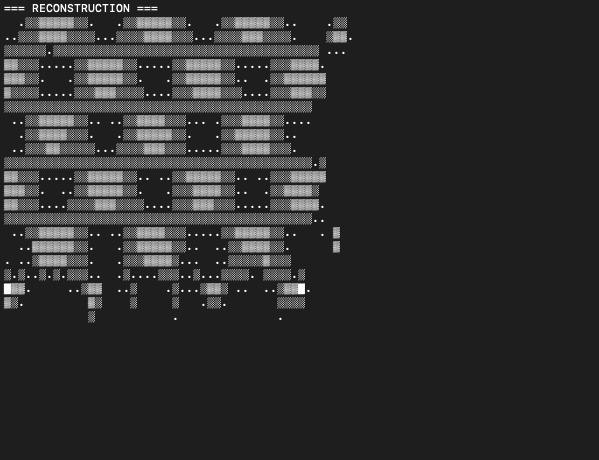
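A terminal heat-map like the one above takes only a few lines. Both the wave formula in `wavy_grid` and the character ramp are hypothetical stand-ins, not the author's test data or printer. The sketch only shows the idea: map each cell's magnitude, relative to the grid's peak, onto a ramp of increasingly dense characters.

```python
import cmath
import math

RAMP = " .:-=+*#%@"  # hypothetical character ramp, from low to high magnitude

def wavy_grid(F: int, N: int):
    # Hypothetical test signal: magnitude varies as a 2-D wave in [0, 2],
    # and the phase advances steadily along the time axis.
    return [[(1.0 + math.sin(0.5 * f) * math.cos(0.3 * n)) * cmath.exp(0.2j * n)
             for n in range(N)] for f in range(F)]

def ascii_heatmap(grid) -> str:
    # One character per cell, scaled to the global peak magnitude (phase is ignored).
    peak = max(abs(x) for row in grid for x in row) or 1.0
    lines = []
    for row in grid:
        idx = (min(int(abs(x) / peak * len(RAMP)), len(RAMP) - 1) for x in row)
        lines.append("".join(RAMP[i] for i in idx))
    return "\n".join(lines)

print(ascii_heatmap(wavy_grid(8, 40)))
```

Printing the target, the reconstruction and their difference through the same function gives the three-panel terminal view described earlier. Each panel is scaled to its own peak, so faint residuals remain visible.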

With 5000 occurrences of only 4 patterns, the algorithm was able to capture around one third of the data (the number of occurrences could obviously be increased, but I kept this result because it shows how the compression is limited by the algorithm's constraints, namely the number and size of the patterns and the number of occurrences):

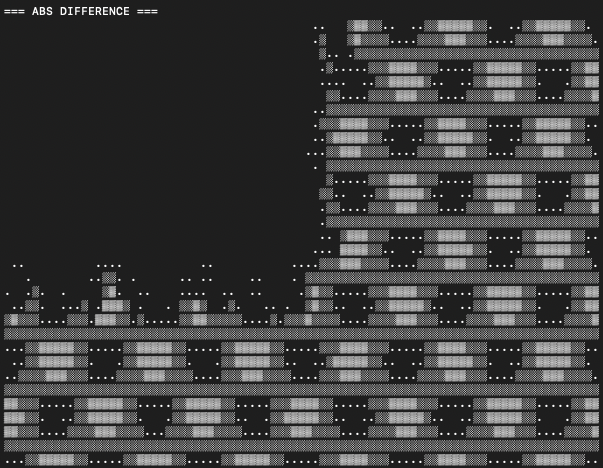
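The post does not say exactly how "around one third" was measured. One common way to quantify it, assumed here, is the fraction of spectral energy explained by the reconstruction. That fraction is computed from the same complex residual the terminal heat-map displays. The function names are hypothetical.

```python
def residual(target, recon):
    # Element-wise complex difference: the part the patterns and occurrences do not explain.
    return [[t - r for t, r in zip(t_row, r_row)] for t_row, r_row in zip(target, recon)]

def explained_energy(target, recon) -> float:
    # 1 - ||target - recon||^2 / ||target||^2; 1.0 means a perfect reconstruction.
    res = residual(target, recon)
    err = sum(abs(x) ** 2 for row in res for x in row)
    total = sum(abs(x) ** 2 for row in target for x in row)
    return 1.0 - err / total if total else 1.0

target = [[1 + 0j, 1j], [-1 + 0j, 1 + 0j]]
recon = [[1 + 0j, 0j], [0j, 0j]]
print(explained_energy(target, recon))  # 0.25: one of four unit-energy cells is matched
```

The difference is taken on complex values. A cell with the right magnitude but the wrong phase therefore still counts as unexplained, which matches the phase-aware goal of the model.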

The ASCII illustration below shows the part of the data that the algorithm does not describe, owing to the limited number of patterns and occurrences.

UPDATE 2025/04/25: the incomplete reconstruction was actually caused by a bug in the model that allowed pattern occurrences to be placed only in the top-left quadrant. Occurrence locations are parametrised by two complex numbers with phases in (−π, π), and these were accidentally centred on the top-left corner instead of the centre of the grid. I've now fixed this in the code on GitHub, but I've left the blog post unchanged apart from this update.
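The effect of the bug is easy to see on a single axis. This sketch assumes the simplest possible mapping from a phase θ ∈ (−π, π) to a coordinate. It illustrates "centred on the corner instead of the centre" and is not the repository's actual code; the names are hypothetical.

Scaling the phase directly gives coordinates in (−size/2, size/2), so half of all phases fall off each axis. In two dimensions only the top-left quarter of the grid is then reachable. Adding half a turn before scaling fixes it.

```python
import math

def coord_buggy(theta: float, size: int) -> float:
    # Phase scaled directly: theta in (-pi, pi) gives (-size/2, size/2),
    # so only non-negative phases land on the grid.
    return theta / (2 * math.pi) * size

def coord_fixed(theta: float, size: int) -> float:
    # Shift by half a turn so phase 0 maps to the centre: (0, size).
    return (theta / (2 * math.pi) + 0.5) * size

def on_grid_fraction(coord, size: int = 64, samples: int = 200) -> float:
    # Sweep phases evenly over (-pi, pi) and count how many map inside [0, size).
    thetas = [-math.pi + 2 * math.pi * (k + 0.5) / samples for k in range(samples)]
    frac_1d = sum(0 <= coord(t, size) < size for t in thetas) / samples
    return frac_1d ** 2  # both axes use the same mapping independently

print(on_grid_fraction(coord_buggy), on_grid_fraction(coord_fixed))  # 0.25 1.0
```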

One working day later...

By the evening the model could reconstruct a synthetic test grid with a small dictionary and far fewer occurrences than pixels. No extensive design document, no weekend-long coding marathon—just a day of iterative conversation with an AI partner. Next steps are clear: push the code to GitHub, train on real electronic tracks, and measure how low we can take the bitrate.
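A first, rough proxy for bitrate is to count the real numbers the model stores against those in the dense grid, before any quantisation or entropy coding. The sketch makes these assumptions:

- patterns are 5 × 5 complex windows, as in the post;
- each occurrence costs a hypothetical three reals (two position phases and one magnitude);
- the pattern index is ignored.

The function names and that cost are illustrative assumptions, and no particular grid size from the post is implied.

```python
def stored_reals(num_patterns: int, pattern_size: int, num_occurrences: int,
                 reals_per_occurrence: int = 3) -> int:
    # Each pattern cell is one complex number, i.e. two reals.
    pattern_cost = num_patterns * pattern_size * pattern_size * 2
    # Hypothetical: two position phases plus one magnitude per occurrence.
    return pattern_cost + num_occurrences * reals_per_occurrence

def compression_ratio(F: int, N: int, num_patterns: int, pattern_size: int,
                      num_occurrences: int, reals_per_occurrence: int = 3) -> float:
    # Dense cost: F * N complex cells, two reals each.
    dense = F * N * 2
    return dense / stored_reals(num_patterns, pattern_size, num_occurrences,
                                reals_per_occurrence)

# The configuration from the ASCII experiment: 4 patterns of 5 x 5, 5000 occurrences.
print(stored_reals(4, 5, 5000))  # 15200
```

For any grid size F × N, `compression_ratio(F, N, 4, 5, 5000)` then gives the dense-to-sparse ratio under these assumptions. Occurrences dominate the cost, so the occurrence count is the main lever on bitrate.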

What makes this prototype different

The crucial detail is that occurrences are not tied to the lattice. Each centre is stored as two unit-complex numbers whose phases map to continuous coordinates, so patterns can be placed anywhere—even between grid cells—while gradients still flow. A single pattern can therefore be reused at arbitrary offsets instead of being cloned for every shift. This first experiment shows that phase-parametrised placement can turn a dense spectrogram into a sparse set of grid-free building blocks, opening the door to extremely compact music compression.
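A one-dimensional toy shows why grid-free placement keeps gradients alive. It uses plain linear interpolation as the 1-D analogue of the bilinear writer and omits the phase parametrisation; the names and the squared-error loss are hypothetical.

Snapping a position to the nearest index makes the loss flat in that position, so its derivative is zero. Interpolated placement gives a non-zero derivative that points toward the target.

```python
import math

def place_rounded(length: int, pos: float):
    # Snap a unit impulse to the nearest index: piecewise constant in pos.
    out = [0.0] * length
    out[round(pos)] += 1.0
    return out

def place_interp(length: int, pos: float):
    # Split a unit impulse between the two neighbouring indices (linear interpolation).
    out = [0.0] * length
    i = math.floor(pos)
    frac = pos - i
    out[i] += 1.0 - frac
    out[i + 1] += frac
    return out

def loss(place, pos: float, target) -> float:
    # Squared error between the placed impulse and the target signal.
    return sum((o - t) ** 2 for o, t in zip(place(len(target), pos), target))

def d_loss(place, pos: float, target, eps: float = 1e-4) -> float:
    # Central finite difference of the loss with respect to the position.
    return (loss(place, pos + eps, target) - loss(place, pos - eps, target)) / (2 * eps)

target = place_interp(8, 3.7)  # the "true" occurrence sits between cells 3 and 4
print(d_loss(place_rounded, 3.2, target), d_loss(place_interp, 3.2, target))
# first value is 0.0; second is close to -2.0, so gradient descent moves pos toward 3.7
```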

Conclusion

Working with ChatGPT o3 felt like pairing with an always-awake research colleague: every question was answered instantly, every edit compiled on the spot, and roadblocks dissolved in minutes instead of months. An experiment that had lived in my “someday” notebook for years—designing a grid-free, phase-aware music compressor—went from sketch to running prototype in a single day of dialogue and iterative coding. Turning long-standing ideas into tangible results this quickly is both liberating and a glimpse of how research will feel in the very near future. Exciting times!

See the GitHub repository here.

Discuss on HN.